FineTuning

Enhance medical reasoning with AI fine-tuning.

FineTuning is an advanced project focused on enhancing medical reasoning capabilities using the DeepSeek-R1-Distill-Llama-8B model. This project employs Low-Rank Adaptation (LoRA) and the Unsloth framework to fine-tune the model on medical reasoning tasks. The implementation is designed to generate structured medical answers with chain-of-thought explanations, making it a valuable tool for clinical applications.

Key Features

Advanced Medical Reasoning: Fine-tuned on the FreedomIntelligence medical-o1-reasoning-SFT dataset for enhanced clinical reasoning.

Efficient Fine-Tuning: Utilizes LoRA adapters for parameter-efficient training, ensuring optimal performance with minimal resource usage.

Chain-of-Thought Responses: Capable of generating structured medical answers with detailed reasoning traces.

Quantized Training: Implements 4-bit quantization for improved memory efficiency.

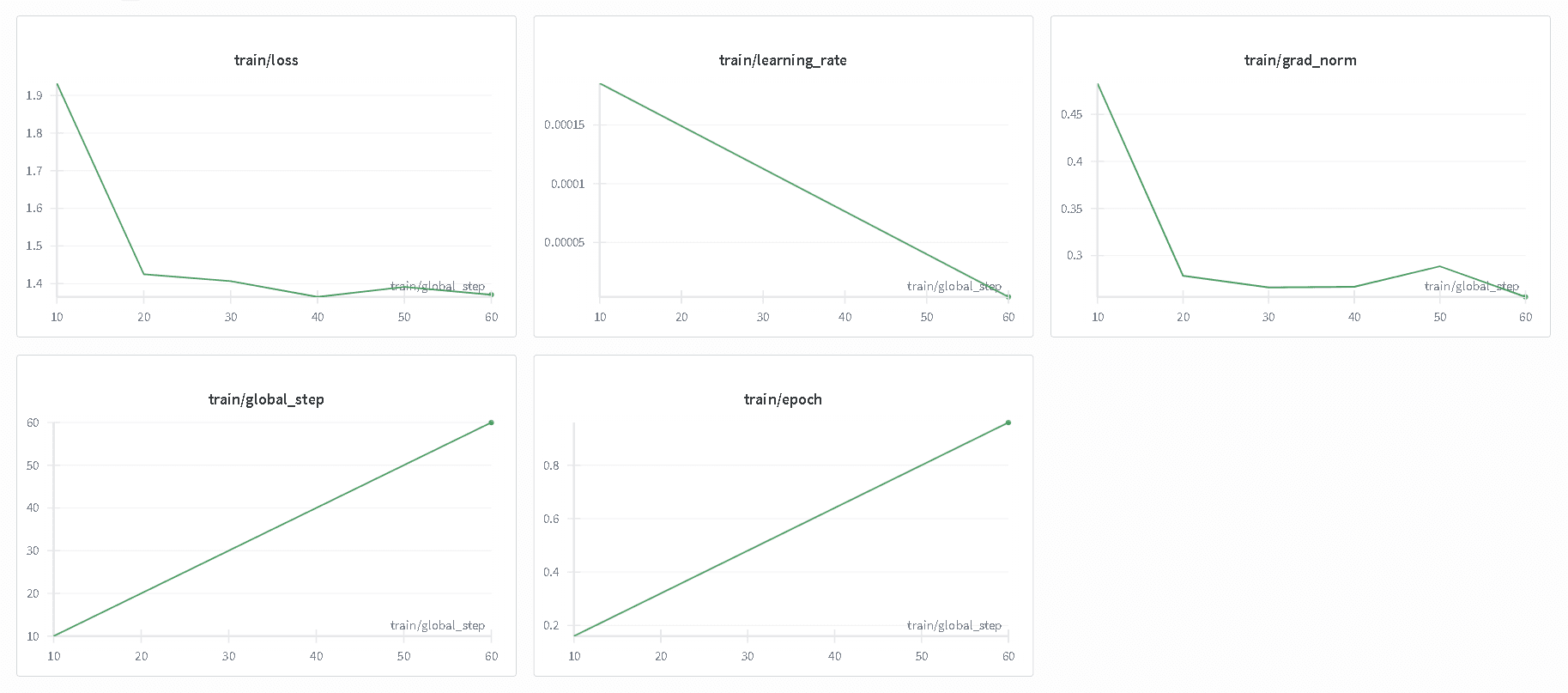

Experiment Tracking: Integrated with Weights & Biases (WandB) for comprehensive monitoring and analysis.

Hugging Face Integration: The model is hosted and accessible via the Hugging Face Hub, facilitating easy deployment and testing.

Inference Scripts: Provides ready-to-use scripts for testing and deployment, streamlining the integration process.

Technical Requirements

Hardware: Requires an NVIDIA GPU with CUDA support (recommended: 16GB+ VRAM) and 16GB+ system RAM.

Software: Compatible with Python 3.8 or higher, CUDA 11.8 or higher, and Git for repository management.

This project is open source and licensed under the MIT License, encouraging contributions and collaboration from the community.

Built with